Just a few years ago, at the dawn of the AI era, an advisory firm announced that it could make "expert AI-driven forecasts" and on its website proudly billed itself as the "first regulated AI financial advisor."

The SEC was watching. In March 2024, the regulator announced that San Francisco-based Global Predictions, along with Toronto-based Delphia, would pay a combined $400,000 in civil penalties to settle charges that they made false and misleading statements about their use of artificial intelligence.

Given the current regulatory landscape, firms must strike a balance between technological innovation and investor protection.

For financial advisors, the lesson is clear: The rush to adopt AI-infused wealthtech tools must be tempered by the regulatory and reputational risks those tools bring. When outputs can't be explained, accountability becomes a casualty. In financial services, that's an unaffordable risk.

These regulatory signals aren't just box-ticking exercises; they reflect deeper concerns about how AI may shape client outcomes, firm reputation and market stability. Consider these six approaches to implementing and monitoring AI tools with minimal turbulence.

Avoid AI washing

Misleading clients or regulators about AI capabilities is a short road to sanctions.

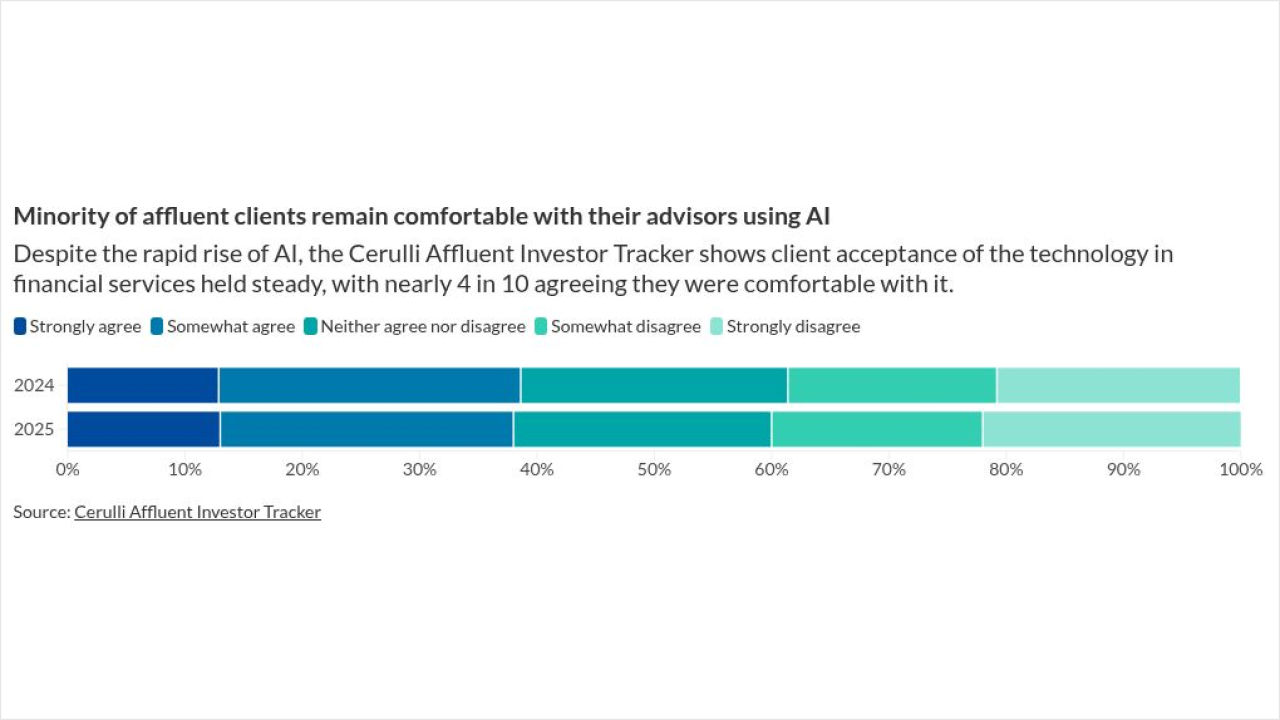

If you don't use AI, don't claim you do. Regulators expect substance over slogans. Avoiding hype and embracing clarity also builds credibility with clients who are increasingly skeptical of tech promises.

Strengthen what already works

Current compliance processes already address some AI risks. According to FINRA guidelines, low-risk use cases — such as using gen AI to summarize public documents — don't require extensive reviews. Don't reinvent the wheel.

Say no to gen AI (selectively)

Create actionable policies on where not to use gen AI.

Some large financial institutions, including Bank of America, have barred the use of ChatGPT due to privacy and accuracy concerns. Saying "no gen AI here" for sensitive functions reduces risk and affirms human expertise.

Erect guardrails

Building guardrails into your AI lifecycle from day one will pay off as usage scales. Before deploying a tool, confirm that its risk exposure falls within your firm's tolerance. For example, Morgan Stanley limits its GPT-4 tools to internal use with proprietary data only, keeping risk low, compliance high and advisors firmly in control.

To keep performance reliable and to limit risk, it's also important to define model selection and monitoring protocols. In other words, ensure the right AI model is chosen and continually checked for accuracy, bias and concept or data drift.
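For firms whose tech teams want a concrete starting point, a basic drift check can be quite simple. The Python sketch below is illustrative only, not a regulatory requirement: it computes the Population Stability Index (PSI), one common way to compare a model's recent inputs or scores against a baseline period. The equal-width binning, the 0.1 and 0.25 cutoffs (a widely used rule of thumb) and the function names `psi` and `drift_status` are assumptions chosen for illustration.

```python
import math
from typing import List, Sequence


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index between a baseline sample and a recent sample.

    Simplification (assumption): equal-width bins spanning both samples.
    """
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo = min(min(expected), min(actual))
    hi = max(max(expected), max(actual))
    width = (hi - lo) / bins or 1.0  # avoid dividing by zero if all values match

    def shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = min(int((v - lo) / width), bins - 1)  # top edge goes in last bin
            counts[idx] += 1
        # Floor empty bins at a tiny share so the log term stays finite.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    # PSI = sum over bins of (actual% - expected%) * ln(actual% / expected%)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_status(score: float) -> str:
    """Map a PSI score to an action using assumed rule-of-thumb cutoffs."""
    if score < 0.1:
        return "stable"
    if score < 0.25:
        return "monitor"
    return "investigate"


if __name__ == "__main__":
    baseline = [0.1 * i for i in range(100)]    # e.g., last quarter's model scores
    recent = [0.1 * i + 5 for i in range(100)]  # this month's scores, shifted upward
    score = psi(baseline, recent)
    print(f"PSI = {score:.2f} -> {drift_status(score)}")
```

In practice a firm would run a check like this on a schedule and route "monitor" or "investigate" results to a named reviewer, so that drift triggers a human decision rather than a silent model update.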

To that end, wealth management firms should implement

Vet vendors

Both FINRA and the SEC are

Assess AI materiality

Assessing materiality means evaluating how significant AI is to your business financially, operationally and strategically. In practice, it comes down to one question: "If this AI system fails, drifts or makes a wrong call, how much does it really matter to our firm?"

Treat AI with the same rigor as any other high-stakes area of the business, such as credit or employee health care decisions. Regulators recommend tailoring AI oversight to risk: Let low-impact models move quickly, but add controls for high-stakes decisions.
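One way to make risk-based oversight concrete is to turn the materiality question into a few yes/no answers that map to a tier of controls. The Python sketch below is purely illustrative: the three questions, the three tiers, the control lists and names such as `AIUseCase` and `materiality_tier` are hypothetical examples, not a regulatory standard, and each firm would define its own.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List


class Tier(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


@dataclass(frozen=True)
class AIUseCase:
    name: str
    client_facing: bool     # do outputs reach clients directly?
    affects_advice: bool    # does it shape recommendations, allocations or trades?
    uses_client_data: bool  # does it touch nonpublic client information?


# Hypothetical control sets per tier; each firm would define its own.
CONTROLS: Dict[Tier, List[str]] = {
    Tier.LOW: ["standard compliance review"],
    Tier.MEDIUM: ["standard compliance review", "periodic accuracy sampling"],
    Tier.HIGH: [
        "standard compliance review",
        "periodic accuracy sampling",
        "human sign-off before outputs reach clients",
        "ongoing drift and bias monitoring",
    ],
}


def materiality_tier(use_case: AIUseCase) -> Tier:
    """Illustrative rule: anything that shapes advice is treated as high-stakes."""
    if use_case.affects_advice:
        return Tier.HIGH
    if use_case.client_facing or use_case.uses_client_data:
        return Tier.MEDIUM
    return Tier.LOW


def required_controls(use_case: AIUseCase) -> List[str]:
    """Return a copy of the controls for the use case's tier."""
    return list(CONTROLS[materiality_tier(use_case)])


if __name__ == "__main__":
    summarizer = AIUseCase("public-document summarizer", False, False, False)
    recommender = AIUseCase("portfolio recommendation drafts", True, True, True)
    for case in (summarizer, recommender):
        print(case.name, materiality_tier(case).value, required_controls(case))
```

Under these assumed rules, a tool that summarizes public documents lands in the low tier with only standard review, while a tool that shapes client recommendations lands in the high tier and picks up human sign-off and monitoring.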

AI is a once-in-a-generation opportunity for wealth management, but it will always need something far older to guide it: human judgment. In the rush to adopt the technology, FOMO is rampant, and it is pushing firms into regulatory hazard zones. In an era of algorithmic advice, client trust depends on strong governance anchored by that judgment.

Winning in AI doesn't just mean having the most advanced model; it means knowing when and how to apply it responsibly. It's in the space between speed and sense that the real competitive edge lies for wealth management.