The CFP Board has convened a new AI Working Group to address the technology's place in the financial planning industry.

The group first met Tuesday and Wednesday at the organization's headquarters in Washington, D.C. The roster includes

This working group was launched in an effort "to explore how technology can elevate financial planning while strengthening the human relationships at its core," said K. Dane Snowden, chief operating officer of the CFP Board.

In February, the CFP Board released its

A staff report drawing on the working group's insights will be delivered to the CFP Board by the end of the year. As part of that work, Snowden said, they will also assess whether this guidance needs to evolve.

"During the meeting, the working group explored emerging AI trends across and beyond the financial sector, focusing on real-world use cases — from client experience to advisor workflows to strategies for safeguarding public trust," he said.

While the group doesn't take policy positions, it did review more than 600 state and federal AI-related bills during the two-day meeting, said Snowden.

"Our goal is to help the profession prepare for a range of possible future scenarios, including those involving new regulations," he said.

The importance of clear guidelines

While the CFP Board drafts its staff report and state and federal regulations take shape, many firms have taken the initiative to develop their own internal AI policies.

Transparency about the use of AI, compliance with applicable regulations and privacy protection for data used with AI systems are all important issues, said Chris Blotto, senior vice president and chief digital and information officer at Commonwealth Financial Network.

Commonwealth established a Generative AI Working Group in 2023 with employees representing data, legal, compliance, information security, third-party management and technology, said Blotto. The first set of guidelines for advisors and employees came out in mid-2023. Since then, he said, the working group has updated its policies and guidelines twice, in mid-2024 and in April, to reflect the rapid expansion of AI tools and their use by employees and advisors.

"Our policies and guidelines were created to address key risks: privacy, cybersecurity, bias, regulatory and reputational, as well as limitations inherent in current AI systems," he said.

It would be helpful if the CFP Board group could provide AI best practices for advisors and firms, said Blotto.

"There are no new regulations regarding AI specifically, so the need is to ensure compliance with all existing rules and regulations," he said. "AI tools and potential use cases create open questions regarding how to increase efficiencies while not losing sight of regulatory rules, fiduciary standards and client expectations."

Advisors who don't embrace AI stand a strong chance of seeing a significant falloff in client-perceived value over the next 15 years, said Bill Shafransky, senior wealth advisor at

"If you're not taking advantage of technologies like OpenAI, FP Alpha or even a

Top issues to address

The CFP Board Working Group must address not just future applications and trends, but also legal and ethical limits to AI in financial planning — particularly where this intersects with estate planning, fiduciary standards and confidentiality, said William "Bill" London, partner at the law office of

"Estate planning is so very personal and involves such risk-filled decision-making that demands human judgment, emotional nuance and discretion — none of which can be reasonably replicated by AI," he said.

There is also the question of whether legal or financial guidance provided by AI crosses into the unauthorized practice of law or breaches fiduciary responsibilities, said London. In California, for example, there are strict requirements for who is qualified to offer legal guidance, and AI systems are not yet recognized as licensed and accountable actors.

"Use by companies of AI in financial or legal processes with little or even no review by a human can expose companies to liability, especially if errors harm the clients or the client is unaware that AI contributed to formulating a plan or proposal," he said. "Ethical guidelines must therefore prioritize transparency — customers must be clearly told whether or not AI is engaged in the recommendations they're offered. And whatever adviser or attorney is employing these systems is going to be ultimately accountable for reviewing and signing off on what they produce."

One critical area is how AI-enabled tools influence risk profiling, product suitability and fiduciary decision-making, said Guy Gresham, a sustainability advisor at

"Financial advisors sit at the intersection of trust and technology — clients need to understand not just the advice, but how it was generated," he said. "From an investor relations and disclosure standpoint, the same scrutiny applied to public companies should extend to advisory practices: transparency in AI usage, underlying assumptions and potential bias should all be front and center."

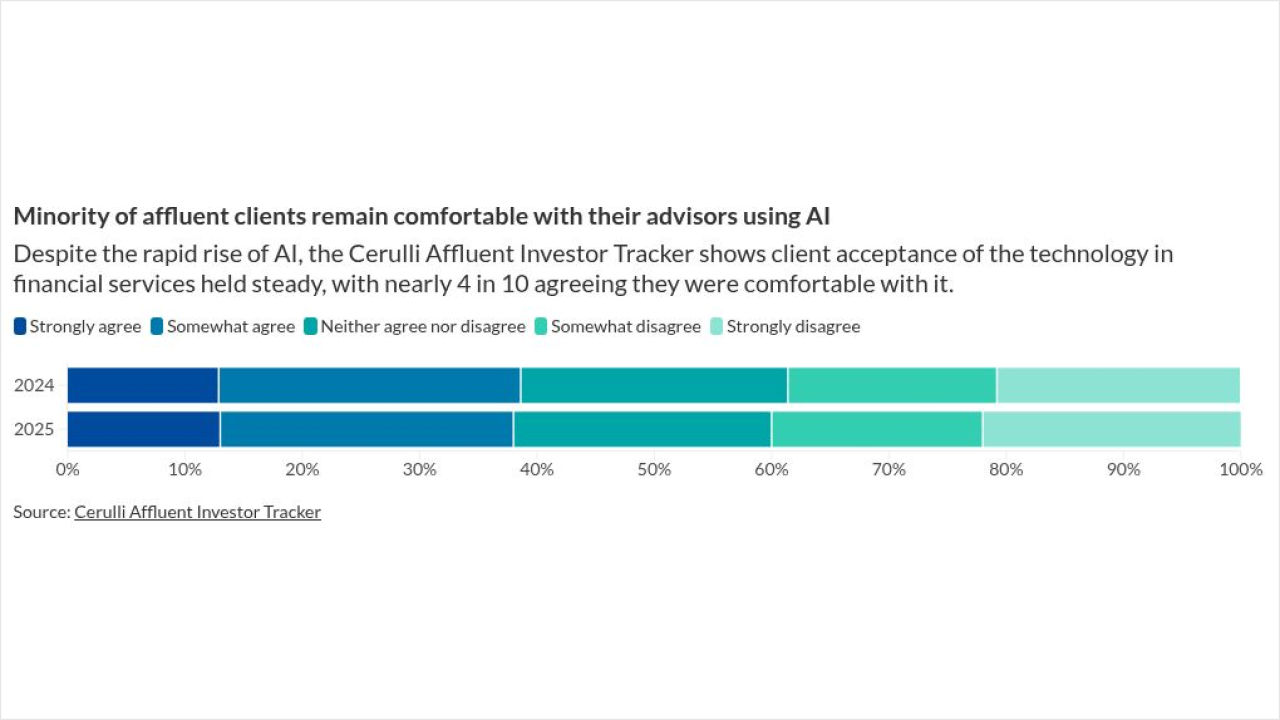

Financial planners increasingly rely on AI tools to enhance client service — but few clients fully understand how these tools shape advice.

"Ethical practice demands clear, plain-language disclosures that explain how AI is being used, where human judgment fits in and what limitations exist," he said. "In capital markets, we're already seeing investor demand for transparency in AI-generated disclosures and ESG ratings — financial planning should follow suit to uphold client trust and autonomy."